“Metrics that are not valid are dangerous”

– One of the principles of Context-Driven Testing In Action

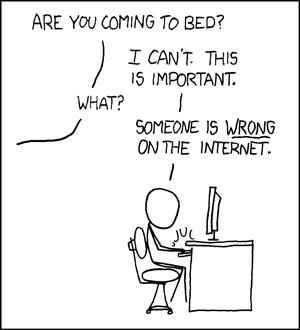

Over the weekend, I got into another silly argument on the internet. Martin Burns referenced a Rally whitepaper, claiming that teams that used task hours and story points had 250% more quality than teams doing #NoEstimates.

At first I thought it was a joke; I imagined an announcer saying “Now with two hundred and fifty percent more quality!” — and, I admit, that did not help things.

The conversation went downhill from there.

Our colleague Justin Rohrman, the president of the Association for Software Testing and an authority on software quality metrics, generated at least one good thing out of the Twitter conversation: a blog post on bugs found after release to production as a measure of quality.

Today, I’d like to do a follow-up, in my folksy, imprecise, humanistic way, without using a half-dozen words that end in -ity, -ize, and -ion and without starting by defining the words I will later use to define the words that I use abstractly to logically prove that …

Nope. I’m gonna talk about one thing: whether a metric is valid.

And I’m going to do it from a casino.

Valid Measures At The Poker Table

The great player, Sherlock Hemlock, is going to play at a private gambling house off the French Riviera. He asks Mr. Watson, his assistant, to find the highest-stakes table. After ten minutes of research, Mr. Watson returns and says, “No problem, boss! I counted, and the table on the left has way more chips!”

Wait.

Is that right?

Assuming the chips can have different face values … Mr. Watson is missing something, isn’t he?

If you want to count things, you need to look at the dollar value of chips, not just the number. Counting just the number is not a valid measurement, at least not for the purpose. If we wanted to know the weight of the chips, and they were all the same weight, counting might work just fine.

For finding the highest-stakes table? Not so much. That metric is not valid. Worse, counting chips could actually lead us to a worse decision than, say, looking at the clothing of the players and suggesting the table that seemed to be more fashionable.

The example above is a picture. It is simple, perhaps too simple. Counting things that vary and ignoring the variance is one way to make measures invalid; it is not the only one. For today, I wanted to talk about the problem of missing an important variable, which leads to an invalid measure.
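For readers who think better in code, the chip-counting mistake can be sketched in a few lines of Python. The table names and chip denominations here are invented for illustration; the only point is that ranking by count and ranking by value can disagree.

```python
# Hypothetical tables: each list holds the face value of every chip on the table.
# These numbers are made up purely to illustrate the point.
tables = {
    "left": [1] * 500,                  # five hundred $1 chips
    "right": [100] * 40 + [25] * 20,    # forty $100 chips and twenty $25 chips
}


def chip_count(chips):
    """Mr. Watson's measure: how many chips are on the table."""
    return len(chips)


def chip_value(chips):
    """The measure that fits the question: total money on the table."""
    return sum(chips)


# Pick the "biggest" table under each measure.
by_count = max(tables, key=lambda name: chip_count(tables[name]))
by_value = max(tables, key=lambda name: chip_value(tables[name]))

print("Most chips:", by_count)
print("Most money:", by_value)
```

With these made-up numbers, the two measures point at different tables. The count is accurate; it just answers a different question than the one Sherlock asked.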

One More Example

When I first met Damian Synadinos, we had an argument. That’s okay. We’re adults. Damian said Scrum could never work. I was offended, because I had seen it work, and work better than what we had before on large waterfall-y and chaos-driven projects.

We kept talking with an air of respect. Today, I think it’s more fair to interpret Damian’s words as “Scrum [as I have seen it and had it explained to me] does not work [as well as people claim.]”

Scrum is an abstract concept. What people actually do can be better or worse.

Imagine someone surveying bug counts across teams “doing Scrum”, knowing some teams were like what Damian was doing a few years ago, some were like my experience, and plenty more fell in between. Would the results be meaningful?
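Here is a rough sketch of how such a survey could mislead. Every number, team name, and "practice" label below is invented, and the labels stand in for whatever the missing variable really is; nothing here comes from the Rally data.

```python
from statistics import mean

# Invented survey rows: every team answers "yes, we do Scrum".
# "practice" is the hidden variable the survey never asked about.
teams = [
    {"name": "A", "practice": "disciplined", "bugs": 4},
    {"name": "B", "practice": "disciplined", "bugs": 6},
    {"name": "C", "practice": "in name only", "bugs": 30},
    {"name": "D", "practice": "in name only", "bugs": 40},
]

# What the survey reports: one average for "teams doing Scrum".
pooled = mean(team["bugs"] for team in teams)

# What it hides: the averages once the missing variable is taken into account.
bugs_by_practice = {}
for team in teams:
    bugs_by_practice.setdefault(team["practice"], []).append(team["bugs"])
group_means = {practice: mean(bugs) for practice, bugs in bugs_by_practice.items()}

print("Pooled average:", pooled)
print("By practice:", group_means)
```

In this toy data the pooled average describes no actual team; it lands in the gap between two very different groups that happen to share a label.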

To get to meaningful data, we need to get a lot more precise. And be careful. I may blog a bit next month about valid measures, and how to recognize them.

For today, if you find yourself in a casino …

… don’t place a bet until you know what is actually going on.

Thanks, Matt

I think where we left it in our private discussion was: the PDF alone doesn’t have enough data to be absolutely positive whether the metric is valid or not.

Now without wanting to Appeal To Authority, I will (and privately with you already did!) say that the research was carried out by reputable people: Larry Maccherone and SEI at Carnegie Mellon, and there are plenty of caveats in the paper. My own inclination would be therefore to assume honest intent on the part of the authors (unlike the shouty “SNAKE OIL!” people over the weekend, many of whom started shouting long before opening the link if ever they did). Shouting aside, YMMV of course.

Thank you also for recognising that my original tweet was space constrained (and in context, only a “in passing, here’s something interesting” rather than a forensic claim put forward as a killer argument).

It is good to see someone engaging with the data, and if I had the original dataset (and permission to distribute), it’s certainly something I’d like to pursue. Right now, however, for all its potential flaws, it’s still a more robust analysis than almost anything else out there – certainly it goes beyond self-reported data (per Ambler and V1) from large numbers of sources, which in itself is better than “I found with my team…”

I’d like to see more data published so we can go beyond unsupported opinion -v- Eppur si muove arguments, although I suspect there is more noise and complexity in reality than any of us would like.
